Reflecting on 2025 and Sharing Live Streaming Trends for 2026

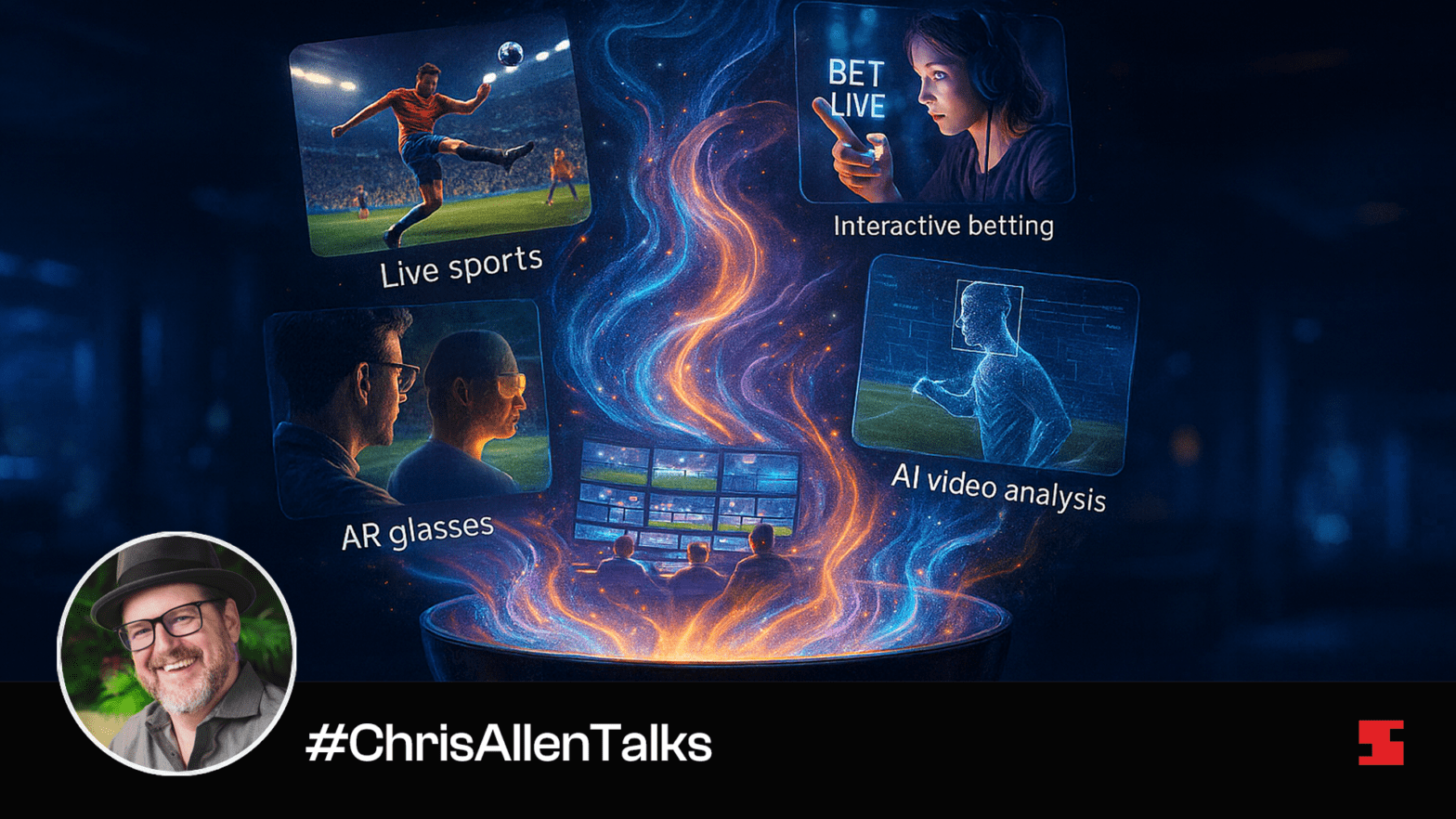

Just like in 2022, 2023, and 2024, we are once again sharing trends and our predictions for the year ahead and what they mean for the future of real-time video. In this blog you will learn how the shift from WebRTC to MOQ is starting, why real-time betting and interactive sports are accelerating, how AI is becoming part of live workflows, what is driving mainstream user-generated broadcasting, how AR glasses are getting closer to real-world use, and why real-time video will no longer be underestimated in 2026.

Watch my short talk on this topic on YouTube.

Looking back at 2025

Let’s begin by revisiting our predictions from last year and discussing the changes we’ve seen, what worked, and what didn’t.

Virtual Sports: The Next Entertainment Frontier

What happened in 2025: The live sports streaming industry continued to grow rapidly, with digital live sports audiences expected to rise about 8.3% in 2025, compared with growth in traditional broadcast viewership. This shift shows that fans are increasingly engaging with live digital sports content, though no single virtual sports platform has yet broken into the mainstream.

Verdict: Trend progressed, but a mainstream breakout platform did not fully materialize.

Revolutionary In-Venue Fan Experiences

What happened in 2025: Broadcasters and tech platforms experimented with more interactive and immersive ways to present live sports. For example, at NAB Show 2025, AWS hosted our platform to demonstrate how live streaming from its cloud resources can bring the benefits of real-time streaming to sports and other live content. The demo highlighted interactive live viewing tools that combine live streaming with real-time analytics and personalization to enhance fan engagement.

Verdict: Trend confirmed — in-venue streaming and interactivity gained traction.

Public Safety and Real-Time Surveillance

What happened in 2025: Official global market reports show live streaming technology, including cloud and AI, is increasingly adopted across sectors, including public safety and predictive analytics for incident response. While direct public safety case studies from government agencies are less centralized, wider market growth signals broader real-time usage.

Verdict: Trend directionally supported by industry growth data.

REMI and Cloud Production Will Become Mainstream

What happened in 2025: Cloud-based live production tools have become a viable alternative to on-premises setups. For instance, AWS Live Cloud Production infrastructure lets producers scale live sports content without dedicated local hardware, in line with broader industry adoption of cloud workflows.

Red5 demonstrated real-world REMI and cloud production workflows using TrueTime Studio™ for Production in live events and broadcast scenarios. At NAB Show 2025, Red5 and partners including AWS, Zixi, Nomad Media, and Videon showcased how TrueTime Studio tools enable remote multi-view live production and collaboration across distributed teams, showing that cloud-based, low-latency production workflows are viable and increasingly adopted in professional broadcasting environments.

Watch our demo from IBC 2024.

Verdict: Trend confirmed — cloud and REMI workflows are widely used.

Red5’s Predictions for the Live Streaming Industry in 2026

Maria Artamonova, our Head of Marketing, asked me to share what I think is coming next for real-time video. The space has moved fast this year, and I expect that pace to accelerate. Here are the shifts I believe we will be talking about in 2026.

Watch the video version of this section on YouTube.

The Move from WebRTC to MOQ Begins for Real-Time Workflows

Watch my short video on this topic on YouTube.

MOQ will not be fully finalized in 2026, but it will reach a point where real deployments become practical. Our upcoming end-to-end work with CacheFly is one example. Once MOQ proves itself at scale, I expect it to become a first-class protocol for real-time video. It will remove the long-standing concerns about WebRTC “not scaling,” which have always been more perception than reality. MOQ will give the industry a clean slate and a modern protocol designed for massive distribution. Learn what MOQ is and how it compares to WebRTC in our previous blogs.

Real-Time Betting, Gaming, and Interactive Sports Experiences Grow Faster

We are already seeing early momentum in betting and interactive gaming. That growth will continue as low latency becomes a requirement rather than a luxury. One-to-many real-time streaming will finally hit its stride. With protocols like MOQ maturing, the friction that slowed these use cases will start to disappear.

AI Becomes Essential in Live Workflows, Not Just an Add-On

The conversation around AI in streaming has been dominated by chatbots and basic automation. In 2026 we will see something more meaningful. AI will be used inside the stream itself for detection, moderation, content cleanup, blurring unsafe material, and improving broadcast quality in real time. This will affect everything from live sports to creator platforms to newsroom workflows.
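To make the "inside the stream" idea concrete, here is a minimal sketch of a per-frame moderation step. It is an illustration under stated assumptions, not how Red5 or any particular product implements it: frames are modeled as plain grayscale pixel grids, and `detect` is a hypothetical callback standing in for whatever AI model flags unsafe regions. The names `pixelate_region` and `moderate_frame` are invented for this example.

```python
from typing import Callable, List, Tuple

Frame = List[List[int]]          # grayscale frame: rows of 0-255 pixel values
Box = Tuple[int, int, int, int]  # (top, left, bottom, right); bottom/right exclusive


def pixelate_region(frame: Frame, box: Box, block: int = 4) -> Frame:
    """Return a copy of the frame with the boxed area replaced by block averages."""
    top, left, bottom, right = box
    out = [row[:] for row in frame]  # copy so the caller's frame is not mutated
    for by in range(top, bottom, block):
        for bx in range(left, right, block):
            ys = range(by, min(by + block, bottom))
            xs = range(bx, min(bx + block, right))
            total = sum(frame[y][x] for y in ys for x in xs)
            mean = total // (len(ys) * len(xs))
            for y in ys:
                for x in xs:
                    out[y][x] = mean
    return out


def moderate_frame(frame: Frame, detect: Callable[[Frame], List[Box]]) -> Frame:
    """Pixelate every region flagged by the (hypothetical) detector."""
    for box in detect(frame):
        frame = pixelate_region(frame, box)
    return frame


if __name__ == "__main__":
    # Synthetic 8x8 gradient frame; the stub detector always flags the top-left 4x4.
    frame = [[(x + y) * 10 for x in range(8)] for y in range(8)]
    clean = moderate_frame(frame, lambda f: [(0, 0, 4, 4)])
    print(clean[0][:4], clean[4][4:])
```

In a real pipeline this step would have to fit within the per-frame time budget, which is why detection and cleanup need to run in the stream path rather than as a post-processing job.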

Watch my short video on this topic on YouTube.

User-Generated Real-Time Broadcasting Becomes Mainstream

Newsrooms, entertainment formats, and creator platforms will lean more heavily on real-time participation. Bringing remote guests into live shows, integrating viewers into interactive formats, and blending live commentary with instant audience input will become standard. The idea of the “second screen” will fade, because phones, TVs, tablets and VR displays now share the same level of importance.

Early Steps Toward Practical AR Glasses for Live Video

Headsets are still bulky and too niche for most users, but smaller form factors are on the way. Meta’s progress with lightweight glasses, including its Orion AR prototype and smart glasses with Meta AI built in, suggests we are getting closer to practical wearable displays that can support real-time video overlays. Lightweight AR glasses with real-time video overlays may not fully break out in 2026, but they will get close enough for the industry to take notice. Once real-time AR becomes viable, it will unlock new categories across sports, training, field operations, and entertainment.

Real-Time Video Will Be Less Underestimated and More Commonly Used

Many teams still assume Low-Latency HLS or HESP is “good enough.” It is not. These formats solve part of the problem but were never built for true interactivity. As soon as scalable real-time protocols (MOQ in particular) become accessible, the market will shift. The industry continues to underestimate how quickly this will happen and how much demand there is for real-time use cases once the technical barriers fall.

Conclusion

These changes point to a simple truth. Real-time video is moving from a specialized capability to an expectation, and 2026 will be the year the industry starts behaving accordingly.

What trend do you predict will shape real-time video in 2026? And what is one trend you think the industry is still underestimating? Share your thoughts in the comments on my LinkedIn post. I would love to hear different perspectives.

Try Red5 For Free

🔥 Looking for a fully managed, globally distributed streaming PaaS solution? Start using Red5 Cloud today! No credit card required. Free 50 GB of streaming each month.

Looking for server software designed for ultra-low latency streaming at scale? Start a Red5 Pro 30-day trial today!

Not sure which solution would best solve your streaming challenges? Watch a short YouTube video explaining the difference between the two solutions, or reach out to our team to discuss your case.

Chris Allen is the co-founder and CEO of Red5, with over 20 years of experience in video streaming software and real-time systems. A pioneer in the space, he co-led the team that reverse-engineered the RTMP protocol, launching the first open-source alternative to Adobe’s Flash Communication Server. Chris holds over a dozen patents and continues to innovate at the intersection of live video, interactivity, and edge computing. At Red5, he leads the development of TrueTime Solutions, enabling low-latency, synchronized video experiences for clients including NVIDIA, Verizon, and global tech platforms. His current work focuses on integrating AI and real-time streaming to power the next generation of intelligent video applications.