
Video streaming delay can make even the best live experience feel out of sync. In this blog post, you will learn what causes video streaming latency and how to solve these issues to keep your streams smooth, responsive, and truly real-time.

Watch my short talk on this topic on YouTube.


What is Video Streaming Delay?

Latency is the delay between when data is sent and when it is received. In streaming, this delay applies to both video and audio signals, affecting how natural and uninterrupted a live experience feels. Latency is introduced at multiple points: video and audio encoding, packet transmission across the network, buffering, and decoding on the playback device.
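To make this concrete, here is a minimal Python sketch that treats end-to-end latency as the sum of the stages listed above. The stage names mirror the paragraph; the millisecond values are made-up placeholders, not measurements of any real pipeline.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    """One step in the capture-to-screen pipeline and its delay in milliseconds."""
    name: str
    delay_ms: float


def end_to_end_latency(stages: list[Stage]) -> float:
    """Total glass-to-glass latency is the sum of every stage's delay."""
    return sum(stage.delay_ms for stage in stages)


# Hypothetical numbers for illustration only; measure your own pipeline.
pipeline = [
    Stage("encode", 30.0),
    Stage("network transit", 60.0),
    Stage("buffering", 100.0),
    Stage("decode", 10.0),
]

total = end_to_end_latency(pipeline)
print(f"end-to-end latency: {total:.0f} ms")  # end-to-end latency: 200 ms
for stage in pipeline:
    # Show each stage's share of the total so the biggest contributor stands out.
    print(f"  {stage.name:<16}{stage.delay_ms:>6.0f} ms ({stage.delay_ms / total:.0%})")
```

Breaking the total down this way makes it obvious where to spend optimization effort: in this hypothetical budget, buffering accounts for half of the delay.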

A related concept is latency jitter, which refers to fluctuations in that delay. Instead of a steady, predictable latency, the delay can vary from moment to moment, causing video to freeze, audio to skip, or streams to drift out of sync.
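Jitter is usually quantified as a running estimate of how much transit time changes from one packet to the next. The Python sketch below follows the interarrival jitter estimator from RFC 3550 (the RTP specification), expressed in milliseconds instead of RTP timestamp units. Because only differences in transit time are used, a constant clock offset between sender and receiver cancels out. The packet timings are hypothetical.

```python
def interarrival_jitter(send_times_ms: list[float], recv_times_ms: list[float]) -> float:
    """Running jitter estimate using the RFC 3550 formula J += (|D| - J) / 16,
    where D is the change in transit time between consecutive packets."""
    jitter = 0.0
    prev_transit = None
    for sent, received in zip(send_times_ms, recv_times_ms):
        transit = received - sent  # may include a fixed clock offset; it cancels in D
        if prev_transit is not None:
            d = abs(transit - prev_transit)
            jitter += (d - jitter) / 16.0  # exponential smoothing with gain 1/16
        prev_transit = transit
    return jitter


# Packets sent every 20 ms; the first stream has constant delay, the second wobbles by 5 ms.
steady = interarrival_jitter([0, 20, 40, 60], [50, 70, 90, 110])
variable = interarrival_jitter([0, 20, 40, 60], [50, 75, 90, 115])
print(f"steady: {steady:.3f} ms, variable: {variable:.3f} ms")
# steady: 0.000 ms, variable: 0.880 ms
```

The 1/16 smoothing factor makes the estimate react to sustained variation while damping one-off spikes.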

There are three main types of latency in streaming, each serving different use cases and protocols:

- Standard latency – Typically 15–30 seconds, common in HTTP-based protocols like HLS and MPEG-DASH, suitable for large-scale content delivery.

- Low latency – Around 3–10 seconds, achieved with optimizations such as Low-Latency HLS (LL-HLS) and low-latency DASH, often used for sports, live events, and news.

- Ultra-low latency – Under 1 second, achieved with WebRTC (including WHIP and WHEP for ingest and playback), SRT, and similar real-time protocols, designed for interactive applications like video conferencing, auctions, and online gaming.
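If you want to label your own measurements with these tiers, a small helper is enough. In this Python sketch the cut-offs are an assumption: the ranges above leave gaps (1 to 3 s and 10 to 15 s), so here anything under 1 s counts as ultra-low, anything up to 10 s as low, and everything slower as standard.

```python
def latency_tier(latency_s: float) -> str:
    """Map a measured glass-to-glass latency (in seconds) to a latency tier.

    Assumed boundaries: < 1 s ultra-low, <= 10 s low, otherwise standard.
    """
    if latency_s < 0:
        raise ValueError("latency cannot be negative")
    if latency_s < 1.0:
        return "ultra-low"
    if latency_s <= 10.0:
        return "low"
    return "standard"


for measured in (0.25, 4.0, 22.0):
    print(f"{measured:>5} s -> {latency_tier(measured)}")
```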

What Really Affects Latency in Live Video Streaming and How to Fix It?

When we talk about live streaming, latency is often the first metric that comes up. At Red5, we work with clients from around the world, and one of the first questions they ask when testing Red5 Pro or Red5 Cloud is how much distance actually matters. The answer is: it depends.

First of all, distance always adds latency, but how much that matters depends on your use case and on traffic conditions at the moment.

Every millisecond adds up, of course. The farther your server is from the viewer, the longer packets take to travel. Even though light in optical fiber covers roughly 200,000 km per second (about two-thirds of its speed in a vacuum), the internet is not a straight line. It is a network of routers, switches, and hops that all introduce delay.
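A quick back-of-the-envelope calculation shows the floor that distance alone sets. The Python sketch below assumes the common approximation of 200 km per millisecond in fiber, plus an optional, hypothetical `route_stretch` factor for cable paths that wander off the straight line. Real round trips will be higher once queuing and per-hop processing are added.

```python
SPEED_IN_FIBER_KM_PER_MS = 200.0  # ~2/3 of c, i.e. about 200,000 km/s (approximation)


def min_rtt_ms(distance_km: float, route_stretch: float = 1.0) -> float:
    """Lower bound on round-trip time from propagation delay alone.

    route_stretch > 1 models a cable path longer than the straight line
    (the value is an assumption you supply). Queuing, processing, and
    serialization delays at each hop come on top of this figure.
    """
    one_way_ms = distance_km * route_stretch / SPEED_IN_FIBER_KM_PER_MS
    return 2 * one_way_ms


# London and New York are roughly 5,600 km apart (approximate great-circle distance).
print(f"{min_rtt_ms(5600):.0f} ms")                     # 56 ms
print(f"{min_rtt_ms(5600, route_stretch=1.5):.0f} ms")  # 84 ms
```

Even in the ideal case, a transatlantic round trip costs tens of milliseconds before a single router has touched the packet.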

Here are a few key factors that affect latency.

Local Network Conditions

How local network conditions affect streaming delay

Often overlooked, local conditions such as distance from the Wi-Fi router, walls blocking the signal, and heavy usage on the network can have a drastic effect on performance and, therefore, on latency. For example, if a viewer is connected to Wi-Fi in a room separated from the router by several walls, the signal loss and interference can cause noticeable latency increases or buffering during a live stream.

How to fix it: Use a wired Ethernet connection whenever possible to eliminate Wi-Fi interference. If Wi-Fi is the only option, move the streaming device closer to the router or use a mesh network to strengthen coverage. Reducing the number of connected devices and upgrading to a dual-band or Wi-Fi 6 router can also improve stability and lower latency.
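One way to check whether local conditions are the problem is to measure round-trip time and its variation from the same device, first on Wi-Fi and then on Ethernet. The Python sketch below uses TCP handshake time as a rough proxy for RTT (an approximation, not a precise ping) and treats jitter as the mean change between consecutive samples. The host, port, and sample count are placeholders, and name resolution is IPv4-only for simplicity.

```python
import socket
import statistics
import time


def tcp_connect_rtts(host: str, port: int = 443, samples: int = 10,
                     pause_s: float = 0.2) -> list[float]:
    """Time repeated TCP handshakes in milliseconds as a rough RTT proxy."""
    ip = socket.gethostbyname(host)  # resolve once (IPv4) so DNS time is not counted
    rtts = []
    for _ in range(samples):
        start = time.perf_counter()
        with socket.create_connection((ip, port), timeout=3):
            pass  # handshake done; close immediately
        rtts.append((time.perf_counter() - start) * 1000.0)
        time.sleep(pause_s)
    return rtts


def summarize(rtts: list[float]) -> dict[str, float]:
    """Median and worst RTT, plus jitter as the mean change between consecutive samples."""
    deltas = [abs(b - a) for a, b in zip(rtts, rtts[1:])]
    return {
        "median_ms": statistics.median(rtts),
        "max_ms": max(rtts),
        "jitter_ms": statistics.mean(deltas) if deltas else 0.0,
    }
```

For example, run `summarize(tcp_connect_rtts("stream.example.com"))` (a hypothetical host) once over Wi-Fi and once over a cable. A large gap in median RTT or jitter between the two points to the local network rather than the wider internet.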

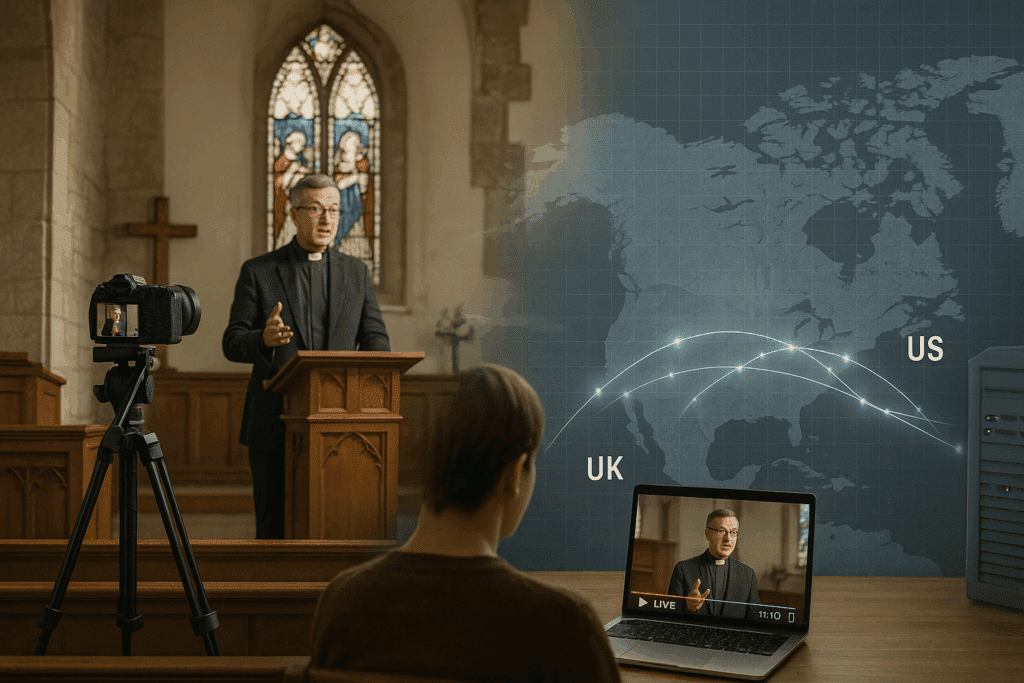

Distance between server and viewer

How distance between server and viewer affects streaming delay

The farther apart the server and viewer are, the longer data takes to travel. For example, if your streaming server is hosted in the US but you're broadcasting from a church in the UK to UK viewers, the video stream must travel across the Atlantic and back, adding noticeable latency compared to hosting the server regionally.

How to fix it: Choose a server or cloud region that’s geographically close to your main audience. Using a distributed infrastructure or CDN with global edge locations helps minimize the distance data needs to travel, reducing latency for viewers no matter where they are.
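If you have probe measurements from your main audience, choosing a region can be as simple as picking the lowest median round-trip time. In this Python sketch the region names and sample values are hypothetical; in practice the samples might come from client-side probes run from where your viewers actually are.

```python
import statistics


def pick_region(rtt_samples_ms: dict[str, list[float]]) -> str:
    """Return the region whose median round-trip time is lowest.

    The median is used instead of the mean so a single slow probe does not
    disqualify an otherwise close region.
    """
    if not rtt_samples_ms:
        raise ValueError("no regions to choose from")
    return min(rtt_samples_ms, key=lambda region: statistics.median(rtt_samples_ms[region]))


# Hypothetical probe results from a UK viewer; region names are placeholders.
samples = {
    "us-east": [82.0, 85.5, 90.1, 84.2],
    "eu-west-london": [9.8, 11.2, 10.4, 35.0],
    "eu-central": [21.3, 20.9, 23.7, 22.0],
}
print(pick_region(samples))  # eu-west-london
```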

Network routing

How network routing affects streaming delay

Internet traffic rarely follows a direct path, and each extra hop adds delay. For example, a stream might be routed through several intermediate servers across different countries before reaching the viewer, causing unnecessary delays even if both the broadcaster and audience are in the same region.

How to fix it: Work with a streaming provider or CDN that offers optimized routing and private backbone networks to minimize unnecessary hops. Implement multi-region routing or intelligent traffic steering to ensure data takes the most efficient path between broadcaster and viewer.
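Conceptually, intelligent traffic steering means preferring the path with the lowest total latency rather than whatever path the default routes happen to take. The Python sketch below runs Dijkstra's algorithm over a made-up topology in which a public path detours through Amsterdam and Frankfurt while a private backbone link stays in the UK. Node names and link latencies are hypothetical; real networks make these decisions through routing protocols such as BGP and provider-specific traffic engineering, not a script like this.

```python
import heapq


def lowest_latency_path(graph: dict[str, dict[str, float]],
                        src: str, dst: str) -> tuple[float, list[str]]:
    """Dijkstra's algorithm over per-link latencies in ms; returns (total_ms, path)."""
    best = {src: 0.0}
    previous: dict[str, str] = {}
    queue = [(0.0, src)]
    visited = set()
    while queue:
        cost, node = heapq.heappop(queue)
        if node in visited:
            continue
        visited.add(node)
        if node == dst:
            break
        for neighbor, link_ms in graph.get(node, {}).items():
            new_cost = cost + link_ms
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                previous[neighbor] = node
                heapq.heappush(queue, (new_cost, neighbor))
    if dst not in best:
        raise ValueError(f"{dst} is unreachable from {src}")
    path = [dst]
    while path[-1] != src:  # walk back along recorded predecessors
        path.append(previous[path[-1]])
    return best[dst], path[::-1]


# Hypothetical UK-to-UK topology: a public detour via the continent vs. a private backbone.
topology = {
    "broadcaster": {"isp-london": 2.0},
    "isp-london": {"ix-amsterdam": 8.0, "backbone-london": 1.0},
    "ix-amsterdam": {"ix-frankfurt": 7.0},
    "ix-frankfurt": {"isp-manchester": 15.0},
    "backbone-london": {"backbone-manchester": 4.0},
    "backbone-manchester": {"isp-manchester": 1.0},
    "isp-manchester": {"viewer": 2.0},
}
total, path = lowest_latency_path(topology, "broadcaster", "viewer")
print(f"{total:.0f} ms via {' -> '.join(path)}")
```

Here the backbone route totals 10 ms versus 34 ms for the continental detour, even though both endpoints sit in the same country.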

Packet loss

How packet loss affects streaming delay

Dropped packets often have to be retransmitted, increasing both latency and jitter. For example, during a live sports broadcast, if the network link between the on-site camera rig and the cloud encoder experiences interference or temporary signal loss, some data packets may be dropped and need to be resent, causing brief playback stutters or delays in the live feed.

Packet loss is one of the biggest contributors to live-stream latency. TCP-based protocols suffer the most because TCP delivers data strictly in order: when a packet is lost, everything behind it waits until the retransmission arrives. This is why RTMP, which runs over TCP, is notoriously bad for latency on poor networks and works best with origin servers near the client.

UDP-based delivery fares much better because not every packet has to arrive. That said, it is still not perfect. With protocols like WebRTC and SRT, critical packets still need to be re-sent, and the client must wait for them to arrive before rendering the video. This backup also contributes to latency.
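The difference between in-order and independent delivery is easy to simulate. This Python sketch assumes a packet every 20 ms, a 50 ms one-way delay, and a single loss whose retransmission arrives one 100 ms round trip late; all numbers are hypothetical. It prints how long each packet waits from send time until the application can use it.

```python
def delivery_times(arrivals_ms: list[float], in_order: bool) -> list[float]:
    """Time at which the application can use each packet.

    With in-order delivery (TCP), packet i is held until every earlier packet
    has arrived, so one late retransmission delays everything queued behind it.
    Without it (UDP), each packet is usable as soon as it arrives.
    """
    released = []
    latest = float("-inf")
    for arrival in arrivals_ms:
        if in_order:
            latest = max(latest, arrival)
            released.append(latest)
        else:
            released.append(arrival)
    return released


# Hypothetical scenario: packet 2 is lost once and its retransmission lands 100 ms late.
SEND_INTERVAL_MS, ONE_WAY_MS, RETRANSMIT_PENALTY_MS = 20.0, 50.0, 100.0
arrivals = [i * SEND_INTERVAL_MS + ONE_WAY_MS for i in range(6)]
arrivals[2] += RETRANSMIT_PENALTY_MS

for label, ordered in (("TCP-style", True), ("UDP-style", False)):
    waits = [r - i * SEND_INTERVAL_MS for i, r in enumerate(delivery_times(arrivals, ordered))]
    print(label, [round(w) for w in waits])
```

Under TCP-style ordering, the one late packet delays every packet behind it (waits of 50, 50, 150, 130, 110, 90 ms), while UDP-style delivery confines the damage to the lost packet itself (50, 50, 150, 50, 50, 50 ms). In WebRTC or SRT, video frames that depend on a retransmitted packet may still have to wait for it, which is the remaining backup described above.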

How to fix it: Use UDP-based protocols like WebRTC or SRT for live streaming instead of TCP-based ones such as RTMP, especially in environments prone to packet loss. Deploy origin or edge servers closer to your source to shorten transmission paths and reduce retransmission delays. Additionally, enable forward error correction (FEC) and adaptive bitrate streaming to help maintain a smooth experience even when some packets are lost.
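Forward error correction trades a little extra bandwidth for not having to wait on retransmissions. The simplest form sends one XOR parity packet per group of media packets, letting the receiver rebuild any single lost packet in that group. This Python sketch assumes equal-length payloads and a group of four (25% overhead); production schemes used with WebRTC, such as ULPFEC and FlexFEC, add headers, padding, and more flexible protection masks.

```python
from functools import reduce
from typing import Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length payloads."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets: list[bytes]) -> bytes:
    """One parity packet protecting a group of equal-length media packets."""
    return reduce(xor_bytes, packets)


def recover(received: list[Optional[bytes]], parity: bytes) -> list[bytes]:
    """Rebuild at most one missing packet (marked None) from the parity packet."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        raise ValueError("simple XOR parity can repair only one loss per group")
    if missing:
        present = [p for p in received if p is not None]
        received = list(received)
        # parity XOR all surviving packets leaves exactly the missing one
        received[missing[0]] = reduce(xor_bytes, present, parity)
    return received


group = [b"AAAA", b"BBBB", b"CCCC", b"DDDD"]
parity = make_parity(group)
damaged = [group[0], None, group[2], group[3]]  # packet 1 lost in transit
print(recover(damaged, parity) == group)  # True
```

The receiver repairs the loss locally instead of waiting a full round trip for a resend, which is exactly why FEC helps latency on lossy links.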

Congestion and bandwidth limits

How congestion and bandwidth limits affect streaming delay

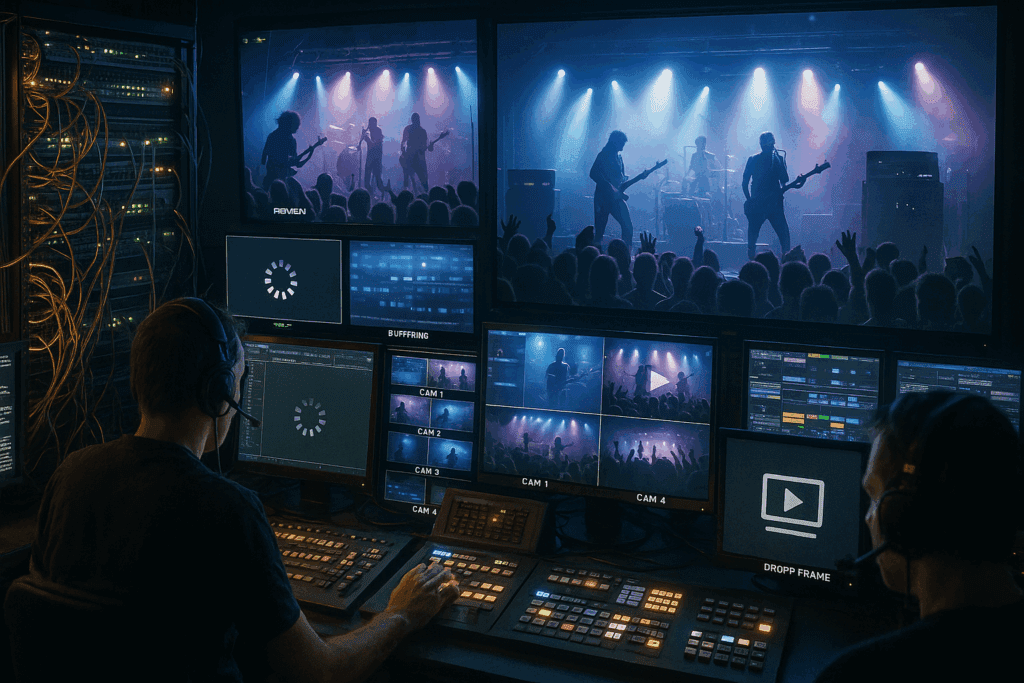

Busy networks and limited throughput slow down data delivery. For example, during a large live event production, multiple camera feeds, graphics systems, and monitoring stations may share the same network, creating congestion that leads to buffering, dropped frames, or noticeable delays in the live broadcast output.

How to fix it: Segment your production network to dedicate sufficient bandwidth for live video traffic and prevent competition from nonessential data. Use network monitoring tools to identify bottlenecks in real time, and configure Quality of Service (QoS) rules to prioritize video and audio packets. When possible, upgrade to higher-capacity switches or dedicated fiber links to ensure stable throughput during high-demand events.
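QoS rules usually key off the DSCP field in each packet's IP header, so the sender (or an edge switch) marks media traffic and the network prioritizes it. The Python sketch below sets DSCP on a UDP socket through the standard `IP_TOS` socket option. Which class to use is a policy decision: EF (46) is traditionally associated with voice, and RFC 4594 suggests AF41 (34) for multimedia conferencing. Markings only help if your switches and routers are configured to honor them, and some platforms, notably Windows, may ignore `IP_TOS`.

```python
import socket

DSCP_EF = 46    # Expedited Forwarding, traditionally used for voice
DSCP_AF41 = 34  # Assured Forwarding 41, suggested for multimedia conferencing (RFC 4594)


def dscp_to_tos(dscp: int) -> int:
    """DSCP occupies the upper six bits of the IPv4 TOS byte (the low two are ECN)."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in 0..63")
    return dscp << 2


def open_marked_udp_socket(dscp: int) -> socket.socket:
    """IPv4 UDP socket whose outgoing packets carry the given DSCP value."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))
    return sock
```

A sender would then call `open_marked_udp_socket(DSCP_AF41)` and use the returned socket's `sendto` as usual; the matching QoS rule on the switch decides how much priority those packets actually get.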

Encoding and decoding time

How encoding and decoding time affects streaming delay

Every codec adds some processing delay, and compression-efficient codecs like H.265 or AV1 generally need more computation per frame than H.264. For example, during a live news broadcast, the encoder may take extra milliseconds to compress each video frame before transmission, especially when handling multiple camera feeds or high-resolution footage, slightly increasing overall end-to-end latency.

How to fix it: Use hardware encoders or GPUs optimized for real-time video compression to minimize processing delay. Lowering the resolution or bitrate slightly can also reduce encoding load without a major quality loss. For time-critical workflows, choose faster codecs like H.264 with low-latency presets or tune encoder settings (such as GOP size and buffer length) for real-time performance.
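As a rough illustration of those encoder settings, the Python sketch below builds an FFmpeg command line for libx264 with a fast preset, `-tune zerolatency` (which disables x264's frame lookahead and B-frames), a fixed GOP, and a small rate-control buffer. The input and SRT output URLs are placeholders, the bitrate and GOP length are arbitrary example values, and SRT output requires an FFmpeg build with libsrt. A small helper also shows the arithmetic behind buffering: every frame an encoder or decoder holds back adds about 1000 / fps milliseconds.

```python
import shlex


def low_latency_x264_args(input_url: str, output_url: str, fps: int = 30,
                          gop_seconds: float = 2.0, bitrate_kbps: int = 2500) -> list[str]:
    """Build an ffmpeg argument list for low-latency H.264 encoding with libx264."""
    gop = int(fps * gop_seconds)
    return [
        "ffmpeg", "-i", input_url,
        "-c:v", "libx264",
        "-preset", "veryfast",   # faster presets spend less time per frame
        "-tune", "zerolatency",  # no lookahead, no B-frames
        "-g", str(gop), "-keyint_min", str(gop),  # fixed, predictable GOP
        "-b:v", f"{bitrate_kbps}k",
        "-maxrate", f"{bitrate_kbps}k",
        "-bufsize", f"{bitrate_kbps // 2}k",  # about 0.5 s of data at maxrate
        "-c:a", "aac",
        "-f", "mpegts", output_url,
    ]


def frame_delay_ms(frames: int, fps: float) -> float:
    """Approximate delay added by holding `frames` frames at a given frame rate."""
    return frames * 1000.0 / fps


# Hypothetical source file and ingest endpoint.
print(shlex.join(low_latency_x264_args("input.mp4", "srt://ingest.example.com:9000")))
print(f"{frame_delay_ms(10, 30):.0f} ms")  # a 10-frame buffer at 30 fps adds ~333 ms
```

The frame arithmetic explains why lookahead and B-frames matter so much for live work: at 30 fps, each frame held back costs about 33 ms.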

How the Right Architecture Maintains Real-Time Streaming Latency

What makes all of this so tricky is that conditions are never constant. You might get smooth playback one hour and noticeable lag the next.

That is why the architecture behind your streaming platform matters. It's best to play it safe and get your endpoints talking to servers that are as close as possible. At Red5, we built our Experience Delivery Network (XDN) to minimize these variables and keep latency consistently under 250 ms worldwide. By placing servers closer to users, optimizing packet handling, and eliminating unnecessary hops, we make live streaming truly real-time.

Conclusion

Streaming latency isn’t caused by one single factor. It’s the result of network distance, routing, congestion, packet loss, and device performance all adding up. The good news is that most of these issues can be managed with the right setup. By optimizing your network, choosing the right streaming protocol, and using an architecture like Red5’s XDN, you can maintain consistently low latency around the world.

Try Red5 For Free

🔥 Looking for a fully managed, globally distributed streaming PaaS solution? Start using Red5 Cloud today! No credit card required. 50 GB of free streaming each month.

Looking for server software designed for ultra-low latency streaming at scale? Start your 30-day Red5 Pro trial today!

Not sure which solution would best solve your streaming challenges? Watch a short YouTube video explaining the difference between the two solutions, or reach out to our team to discuss your case.

FAQs

What is latency in live streaming?

Latency in streaming is the time delay between when a video is captured and when it appears on the viewer’s screen. It’s measured in milliseconds or seconds and results from the time needed to process, encode, transmit, and decode video data over the internet.

Does latency affect streaming?

Yes, latency directly impacts streaming quality and viewer experience. High latency can cause noticeable delays between real-time events and playback, making live interactions feel sluggish. It’s especially critical for use cases like auctions, gaming, and video calls where real-time responsiveness matters.

How does latency affect video streaming quality?

Latency affects how “live” the stream feels. Even when video quality looks sharp, delays between the broadcaster and viewer can make communication awkward and reduce engagement. The same network problems that drive latency up, such as packet loss and congestion, can also trigger buffering and degrade overall playback.

What can cause live streaming delay?

Several factors can cause delay, including distance between servers and viewers, weak Wi-Fi connections, high network traffic, or inefficient encoding settings. Each step adds milliseconds that accumulate into noticeable lag during a live broadcast. Optimizing these elements helps minimize delay.

What is a live streaming delay called?

A live streaming delay is commonly referred to as latency. Depending on its length, it may also be described as “broadcast delay” or “stream lag.”

How to reduce delay in live streaming?

To minimize delay, use ultra-low latency streaming protocols like WebRTC or SRT, choose servers close to your viewers, and ensure strong network conditions. Adjusting buffer sizes, using hardware encoders, and optimizing bitrates can also significantly reduce lag in live broadcasts.

Chris Allen is the co-founder and CEO of Red5, with over 20 years of experience in video streaming software and real-time systems. A pioneer in the space, he co-led the team that reverse-engineered the RTMP protocol, launching the first open-source alternative to Adobe’s Flash Communication Server. Chris holds over a dozen patents and continues to innovate at the intersection of live video, interactivity, and edge computing. At Red5, he leads the development of TrueTime Solutions, enabling low-latency, synchronized video experiences for clients including NVIDIA, Verizon, and global tech platforms. His current work focuses on integrating AI and real-time streaming to power the next generation of intelligent video applications.