AI in live streaming is unlocking new levels of automation, insight, and real-time responsiveness that were previously impossible. With Red5’s upcoming AI-detection service and real-time frame extraction, video content can now be analyzed, enhanced, and acted upon in milliseconds. In this blog, you’ll learn what we discussed at IBC 2025, how Red5’s technology enables instant frame extraction across HTTP and XDN infrastructures, what new AI-driven use cases this makes possible, and how it transforms the speed and intelligence of live video streaming.

Our co-founder and CEO, Chris Allen, talked about ‘AI and the Next Wave of Real-Time Video Intelligence’ at the RTC.On conference in Poland this month. If you prefer video, a recording of the talk is available on YouTube.

New Service Works with HTTP as well as Red5 Cloud XDN Infrastructures

Barriers to getting the most out of AI-assisted per-frame applications tied to live-streamed A/V content are about to fall with Red5’s forthcoming introduction of a fully automated AI-detection service supporting the market’s first real-time frame extraction process.

The emergence of Generative AI supporting deep-learning Large Language Models (LLMs) has triggered a vast array of capabilities that can be used with the aid of hardware-accelerated cloud processing to activate streamed A/V use cases that once were out of reach. The full potential of what can be done with these tools has yet to be attained owing to the time consumed by pulling frames for AI processing.

Now, by enabling for the first time anywhere virtually instant extraction of A/V frames, at any frequency down to sub-second intervals, from content delivered over any ingest protocol Red5 supports (WebRTC/WHIP, SRT, Zixi, RTMP, RTSP) into Red5 Cloud streaming infrastructures, Red5 has opened a new perspective on what can be done in the live-streaming environment. The possibilities truly are unlimited, especially when combined with the capabilities unleashed by Red5’s Experience Delivery Network (XDN) Architecture on the Red5 Cloud real-time streaming platform.

Diagram illustrating real-time frame extraction and encoding for AI in live streaming using SRT

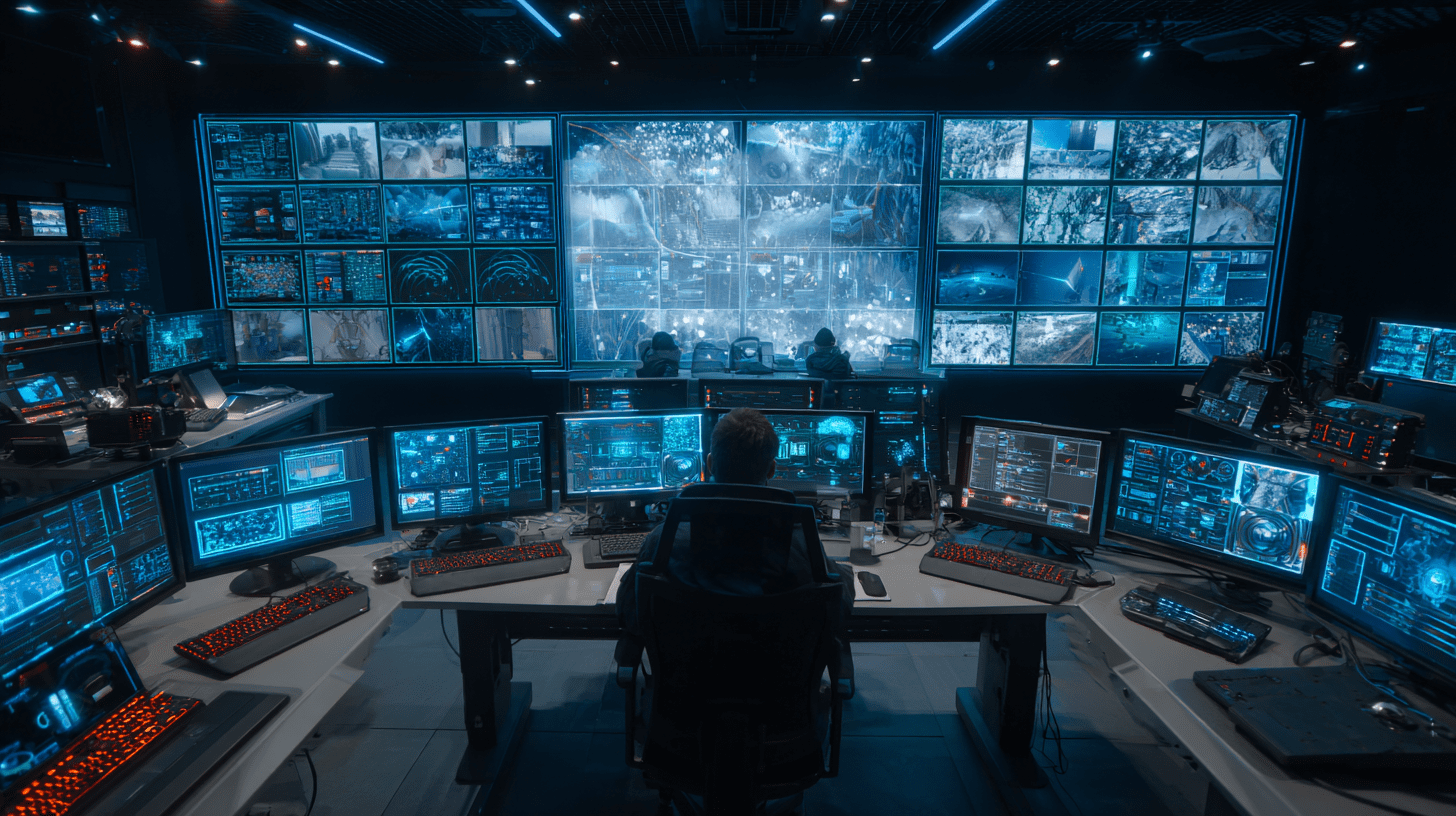

Some AI-related applications enabled by real-time A/V frame extraction relate to surveillance or other scenarios where there’s a need to detect specific objects or faces, variations in street and highway traffic patterns, fires and other emergency-related developments, criminal activity, and other types of developments in the live stream flow, including unwanted audio or video elements such as swear words or indecent exposure. Other applications have to do with pulling and formatting high-quality still images to capture key moments in sports competition, generate promotional thumbnails, or pinpoint defective parts in factory production, to name just some types of use cases that can benefit in ways far beyond what once could only be done by extracting screenshots from stored files.

While there are many frame-extraction solutions that have improved on the screenshot capture model, they typically lag real time by a few seconds or more, which delays the application of AI for whatever types of analysis, content manipulation and formatting are used with the extracted frames. In contrast, the Red5 solution extracts frames in milliseconds from high-latency HTTP-based streams as well as from streams delivered at real-time speeds (WebRTC typically) over XDN infrastructures implemented on the managed Red5 Cloud service.

Conventional and Real-Time Streaming Options

When HTTP Live Streaming (HLS), MPEG-Dynamic Adaptive Streaming over HTTP (DASH) or another conventional streaming mode is involved, the post-production playout stream is delivered as usual over conventional CDNs to end users while, at the same time, the feed is ingested by the XDN Architecture for real-time frame extraction at whatever intervals users set on their Red5 extraction dashboards. In this approach, there’s no end-to-end distribution involving egress from a cloud XDN infrastructure to end users.
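To make the idea of extracting frames "at whatever intervals users set" concrete, here is a minimal Python sketch of interval-based frame sampling. It is not Red5’s implementation: the `Frame` type, the `sample_frames` function and the `interval_seconds` parameter are hypothetical names chosen for illustration, and the sketch assumes frames arrive in timestamp order.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Frame:
    """Hypothetical stand-in for a decoded frame; only the timestamp matters here."""
    pts_seconds: float  # presentation timestamp, in seconds


def sample_frames(frames: Iterable[Frame], interval_seconds: float) -> Iterator[Frame]:
    """Yield the first frame at or after each interval boundary.

    Assumes frames arrive in increasing timestamp order.
    """
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    next_due = None
    for frame in frames:
        if next_due is None:
            # Anchor the schedule on the first frame of the stream.
            next_due = frame.pts_seconds
        if frame.pts_seconds >= next_due:
            yield frame
            # Advance past this frame so a gap in the stream
            # does not trigger a burst of catch-up extractions.
            while next_due <= frame.pts_seconds:
                next_due += interval_seconds


if __name__ == "__main__":
    # Three seconds of a 30 fps stream, sampled twice per second.
    stream = (Frame(i / 30) for i in range(90))
    for frame in sample_frames(stream, interval_seconds=0.5):
        print(f"extract frame at {frame.pts_seconds:.2f}s")
```

Scheduling from the previous deadline rather than from the time of the last extracted frame keeps the sampling grid from drifting when frame timestamps do not land exactly on the interval.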

Instead, as dictated by the user, the extracted frames are pushed into S3 or another cloud-based object storage platform, where the user’s chosen AI mode of processing can be applied instantly to execute the relevant tasks. Depending on the speed of the AI processing, those tasks can often be completed before the HTTP-streamed primary content reaches end users, especially in cases where the streaming entity employs low-latency backend connections to the storage repositories. Moreover, the Red5 Cloud extraction process allows users to choose settings on their service portals that automate whatever transcoding and formatting is required to support their uses of the extracted frames, all in real time.
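The claim that AI tasks can finish before the HTTP-streamed content reaches viewers comes down to a simple timing budget. The Python sketch below shows that arithmetic. The class and field names are hypothetical, and every number in the usage example is an illustrative assumption rather than a measured Red5 or CDN figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FramePipelineBudget:
    """Illustrative per-frame timings, all in milliseconds (assumed values)."""
    extraction_ms: float  # time to pull the frame from the live stream
    upload_ms: float      # time to push the frame into object storage
    inference_ms: float   # time for the chosen AI model to process it

    def total_ms(self) -> float:
        return self.extraction_ms + self.upload_ms + self.inference_ms


def headroom_ms(budget: FramePipelineBudget, http_stream_latency_ms: float) -> float:
    """Time left before the same moment of video reaches HTTP viewers.

    A positive result means the AI result is ready ahead of playout.
    """
    return http_stream_latency_ms - budget.total_ms()


def finishes_before_playout(budget: FramePipelineBudget,
                            http_stream_latency_ms: float) -> bool:
    return headroom_ms(budget, http_stream_latency_ms) > 0


if __name__ == "__main__":
    # Assumed numbers for illustration only.
    budget = FramePipelineBudget(extraction_ms=5, upload_ms=150, inference_ms=400)
    hls_latency_ms = 6000  # assumed glass-to-glass latency of the HTTP stream
    print(f"pipeline total: {budget.total_ms():.0f} ms")
    print(f"headroom: {headroom_ms(budget, hls_latency_ms):.0f} ms")
```

With these assumed values the pipeline takes 555 ms and leaves 5,445 ms of headroom. The same check fails if the stream latency drops below the pipeline total, which is why the speed of the AI processing and of the storage connection matters.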

Alternatively, in instances where streamers have activated real-time streaming end to end over Red5 Cloud XDN infrastructures, there’s no need to push the extracted frames into storage. Instead, distributors can instantly apply the LLM solutions as part of the in-stream flow using AI tools that have been pre-integrated with the Red5 Cloud service or other options once they’ve been integrated through Red5’s open APIs for use on the platform. Depending on the use case, the AI-processed frames can be streamed in real time as part of the primary feed or sent separately over the XDN infrastructure to end users or whatever social media, website or other domain is used to display the output.

Critically, Red5 has taken an AI-agnostic approach to ensure the broadest range of applications are available for use cases with real-time frame extraction. As mentioned, Red5 is pre-integrating some LLM models for use on the platform and will continue doing so over time, based on our assessment of best-of-breed performance options. But, at the same time, users are free to bring other AI models into play based on our ability to integrate virtually any solution that employs open interfaces compatible with the industry standards used with our APIs.

As used here, real time refers to the fact that anything labeled as such is transpiring at latencies so low they are imperceptible, at least to us humans, in contrast to flies on the wall that perceive a millisecond as a long time. End-to-end streaming over XDN infrastructure stays within the 250-ms threshold of imperceptible latency at any distance, so long as the distance itself doesn’t push light-speed propagation delay above that threshold. And, of course, frame extraction in just a few milliseconds meets that stipulation as well.
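The distance caveat can be put in numbers with a short Python calculation of propagation delay. This is a back-of-the-envelope sketch: the fiber refractive index of 1.47 is an assumed typical value, the function names are hypothetical, and real paths add routing and processing delay on top of the figure computed here.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # in vacuum
FIBER_REFRACTIVE_INDEX = 1.47          # assumption: typical optical fiber


def one_way_fiber_delay_ms(distance_km: float) -> float:
    """Propagation delay over a straight fiber run of the given length."""
    speed_in_fiber = SPEED_OF_LIGHT_KM_PER_S / FIBER_REFRACTIVE_INDEX
    return distance_km / speed_in_fiber * 1000.0


def max_fiber_distance_km(budget_ms: float) -> float:
    """Longest fiber run whose propagation delay alone fits in the budget."""
    speed_in_fiber = SPEED_OF_LIGHT_KM_PER_S / FIBER_REFRACTIVE_INDEX
    return budget_ms / 1000.0 * speed_in_fiber


if __name__ == "__main__":
    # Roughly half of Earth's circumference.
    print(f"20,000 km: {one_way_fiber_delay_ms(20_000):.1f} ms one way")
    print(f"250 ms covers about {max_fiber_distance_km(250):,.0f} km of fiber")
```

Under these assumptions a 20,000 km path costs about 98 ms of propagation delay, so distance consumes a sizable share of a 250-ms budget on the longest routes but does not exhaust it.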

XDN latency parameters, which can drop into the 50-ms range when transport is limited to intra-regional distances, are unmatched by any other platform at any scale, let alone at scales into the millions of end users supported by XDN Architecture. This is because, as explained at length in the blog ‘Keys to Optimizing End-to-End Latency with WebRTC’, Red5 has gone well beyond the latency-reducing capabilities of WebRTC and the underlying Real-time Transport Protocol (RTP) to cut latencies typically introduced by encoders, transcoding, stream mixing, multi-transport protocol ingestion and the delivery of personalized ads and content enhancements on a per-user basis.

Red5’s approach to frame extraction ensures the end-to-end latency remains very low when AI processing is employed with XDN streams. And, as noted earlier, when traditional higher-latency streaming is in play, Red5’s real-time frame extraction will enable AI processing on frames passed into storage to be conducted in tandem with or ahead of HTTP streaming latencies.

A Wealth of In-Stream AI Detection Use Cases

Beyond enabling the use of real-time frame extraction to support AI generation of standalone images, these capabilities will have a transformative impact on in-stream use cases wherever they’re put into play, thanks to the power of currently available AI solutions that can be programmatically applied on the Red5 Cloud platform to execute tasks at warp speed. For example:

- Extending across all usage scenarios, one of the most widely needed live streaming applications performed by AI tools entails instant speech-to-text generation for closed captioning in multilingual environments. Red5 has already been employing pre-integrated applications of the Nvidia Parakeet LLM for audio to support this use case in the run-up to our launch of real-time frame extraction.

- In sports, news, and other types of live-streamed program production, AI can locate and fulfill the need for adjustments like noise reduction, image stabilization, and automatic color correction.

- In live-stream post-production and playout, AI can be used to enhance user experiences with personalized graphics, visual effects and animations with the ability when needed to adjust or replace backgrounds.

- AI tools can have a major impact on interactive games in 2D and 3D immersive scenarios with the ability to analyze and shape game play in response to players’ movements, facial expressions, virtual weapon purchases and much else.

- Treatment through telemedicine can be greatly improved with real-time AI-assisted diagnostic analysis complementing caregivers’ observations of patients on their video feeds.

- Retailers can use AI models designed to analyze consumer behavior, help with inventory management and suggest ways to increase product sales.

- In any manufacturing environment, AI can be applied to implement adjustments in quality control, predictive maintenance, and improvements in worker safety.

- An especially significant game changer in this era of heightened property security monitoring and wide-area surveillance camera usage is the role AI can play performing object, facial and activity detection that can’t be done on live feeds manually. Red5’s support for real-time frame extraction on individual streams complements our existing support for real-time surveillance across multiple camera feeds enabled by the TrueTime DataSync and Multiview for Surveillance toolsets, as described in this blog.

The Role Played by XDN Architecture

All the capabilities stemming from Red5’s support for real-time frame extraction are made possible by the unique attributes of XDN Architecture as executed on the Red5 Cloud Software-as-a-Service (SaaS) platform. The full end-to-end real-time multidirectional streaming supported by Red5 Cloud is automatically implemented in accord with user requirements on the global Oracle Cloud Infrastructure (OCI), which spans 50 geographic regions on six continents.

OCI server instances dedicated to Red5 Cloud are automatically configured in hierarchical Origin, Relay and Edge Node clusters for each user based on numbers and locations of end users, streaming volume and other parameters input by users on their service dashboards. Everything that occurs on an XDN infrastructure, from how resources are configured and backed up to how they’re functionally orchestrated, is controlled by the pervasively deployed software stack comprising the XDN Stream Manager.

The architectural component serving both the users who want to perform real-time frame extraction on HTTP streams and those streaming end to end in real time over XDN infrastructures utilizes functionalities in and proximate to the Origin Nodes, where any incoming stream delivered from H.264, H.265, or VP8 encoders at resolutions all the way to 4K can be ingested. XDN ingestion, along with accommodating direct HLS and WebRTC streams, encompasses a comprehensive portfolio of transport modes used with playout contribution and camera feeds, including Real-Time Streaming Protocol (RTSP), Real-Time Messaging Protocol (RTMP), enhanced RTMP (eRTMP), Secure Reliable Transport (SRT), Zixi Software-Defined Video Protocol (SDVP), and MPEG Transport Stream (MPEG-TS).
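The ingest constraints listed above can be summarized as a small pre-flight check. The Python sketch below is illustrative only and is not a Red5 API: the function name and the string identifiers are hypothetical, and it assumes "4K" means a 3840x2160 frame.

```python
from typing import List

# Values taken from the description above; the identifiers are made up.
SUPPORTED_CODECS = frozenset({"h264", "h265", "vp8"})
SUPPORTED_INGEST = frozenset(
    {"hls", "webrtc", "rtsp", "rtmp", "ertmp", "srt", "zixi", "mpegts"}
)
MAX_WIDTH, MAX_HEIGHT = 3840, 2160  # assumption: "4K" means UHD


def ingest_problems(protocol: str, codec: str, width: int, height: int) -> List[str]:
    """Return human-readable reasons a stream would not match the listed ingest options."""
    problems = []
    if protocol.lower() not in SUPPORTED_INGEST:
        problems.append(f"unsupported ingest protocol: {protocol}")
    if codec.lower() not in SUPPORTED_CODECS:
        problems.append(f"unsupported codec: {codec}")
    if width > MAX_WIDTH or height > MAX_HEIGHT:
        problems.append(f"resolution {width}x{height} exceeds 4K")
    return problems


if __name__ == "__main__":
    print(ingest_problems("srt", "h264", 1920, 1080))   # no problems expected
    print(ingest_problems("rtmp", "av1", 7680, 4320))   # codec and resolution flagged
```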

For streamers activating the Red5 Cloud real-time frame-extraction service on HTTP streams, it’s up to them what mode of transport they use to upload the frames to storage for AI LLM processing. In the case of users who are delivering streams to end users employing the real-time transport capabilities of RTP over the Red5 Cloud platform, the service automatically chooses whichever RTP-compatible framework is best suited to each user’s device.

WebRTC is the most used real-time streaming mode owing to the client-side support provided by all the major browsers, including Chrome, Edge, Firefox, Safari and Opera, which eliminates the need for plug-ins or purpose-built hardware. Alternatively, if a mobile device with built-in client support for RTSP is receiving the stream, the platform transmits via RTSP. The client-optimized flexibility of XDN architecture also extends to packaging ingested RTMP, MPEG-TS and SRT encapsulations for transport over RTP when devices compatible with these protocols can’t be reached via WebRTC or RTSP.
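The per-device choice of delivery framework described above can be pictured as a simple fallback chain. The Python sketch below is a simplification under stated assumptions, not the platform’s actual selection logic: the `ClientCapabilities` type, the `Delivery` enum and the strict WebRTC-then-RTSP-then-RTP priority order are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Delivery(Enum):
    WEBRTC = "webrtc"
    RTSP = "rtsp"
    RTP_REPACKAGED = "rtp-repackaged"  # RTMP, MPEG-TS or SRT carried over RTP


@dataclass(frozen=True)
class ClientCapabilities:
    """Hypothetical description of what the receiving device can play."""
    supports_webrtc: bool
    supports_rtsp: bool


def choose_delivery(client: ClientCapabilities) -> Delivery:
    """Pick a delivery mode, preferring WebRTC, then RTSP, then repackaged RTP."""
    if client.supports_webrtc:
        return Delivery.WEBRTC
    if client.supports_rtsp:
        return Delivery.RTSP
    return Delivery.RTP_REPACKAGED


if __name__ == "__main__":
    browser = ClientCapabilities(supports_webrtc=True, supports_rtsp=False)
    mobile = ClientCapabilities(supports_webrtc=False, supports_rtsp=True)
    legacy = ClientCapabilities(supports_webrtc=False, supports_rtsp=False)
    for name, client in [("browser", browser), ("mobile", mobile), ("legacy", legacy)]:
        print(f"{name}: {choose_delivery(client).value}")
```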

In all instances involving HTTP- or XDN-based streaming, the Red5 Cloud frame-extraction service employs Red5’s unique cloud-hosted real-time transcoding process to deliver extracted frames in whatever resolutions and bitrate profiles users choose on the service portal. Our real-time Cauldron stream processor running on OCI commodity servers employs our native code modules or Brews to initiate and configure stream scaling and compression resources through a Multiple Independent Re-encoded Video (MIRV) processor.

These MIRV-configured resources allow us to decode and split a received asset for recompression into multiple bitrate profiles at negligible contributions to latency. By building our software transcoder from scratch rather than relying on FFmpeg or third-party solutions, we’ve shaved hundreds of milliseconds off the typical latency performance using CPUs without additional hardware acceleration.

Conclusion

We’ll be posting many more details about the new Red5 Cloud real-time frame extraction and AI support service as we move to commercial launch, including a list of the LLMs we’ll be integrating at the outset for specific use cases. At this point we can share that service availability will be facilitated by its inclusion as a Red5 Cloud application in the Amazon Marketplace.

But there’s no need to wait for the official launch to start preparing. Feel free to get started now by contacting us at info@red5.net or scheduling a call.

The Red5 Team brings together software, DevOps, and quality assurance engineers, project managers, support experts, sales managers, and marketers with deep experience in live video, audio, and data streaming. Since 2005, the team has built solutions used by startups, global enterprises, and developers worldwide to power interactive real-time experiences. Beyond core streaming technology, the Red5 Team shares insights on industry trends, best practices, and product updates to help organizations innovate and scale with confidence.