
Last week we teamed up with Brightcove to host the Boston streaming video meetup at our Boston offices. The event was intended as an introduction to video streaming technologies and the various approaches to reducing latency.

Thiago Teixeira, Alex Barstow, and Chris Allen each presented one of three approaches to live streaming latency: HLS, CMAF, and WebRTC.

As we’ve covered before, live streaming latency comes from all the complexity involved in sending large amounts of data over the internet. Whichever way you choose to stream your video, some steps are unavoidable. The video and audio must be captured and encoded, sent over the internet via a transport protocol, processed by a media server, distributed through a cluster of other servers, transported back over the internet via another protocol, and finally decoded and rendered by the client viewing the stream.

With conventional streaming protocols, that entire process can take anywhere from 20 to 30 seconds. The graphic below (from Thiago Teixeira’s presentation) shows the process for a typical stream sent over HLS.

The full lifecycle of a video stream
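Much of those 20 to 30 seconds comes from segment buffering. RFC 8216, the HLS specification, says a client SHOULD NOT start playback less than three target durations from the end of a live playlist, so the player begins several segments behind the live edge. The sketch below is a back-of-the-envelope estimate under that assumption; the function name `estimate_hls_latency`, the six-second segment length, and the lumped `pipeline_overhead_s` parameter (encoding, packaging, CDN, decoding) are illustrative choices, not measurements.

```python
def estimate_hls_latency(target_duration_s: float,
                         segments_held_back: int = 3,
                         pipeline_overhead_s: float = 0.0) -> float:
    """Rough lower bound on glass-to-glass latency for standard HLS.

    A player that starts `segments_held_back` target durations from the end
    of the live playlist is at least that far behind real time. Everything
    else in the pipeline is folded into the hypothetical `pipeline_overhead_s`.
    """
    if target_duration_s <= 0:
        raise ValueError("target duration must be positive")
    if segments_held_back < 0 or pipeline_overhead_s < 0:
        raise ValueError("hold-back and overhead cannot be negative")
    return segments_held_back * target_duration_s + pipeline_overhead_s


if __name__ == "__main__":
    # Six-second segments with a three-segment hold-back, before any
    # encoding or network overhead is counted.
    print(estimate_hls_latency(6.0))
```

Shrinking the segments is the obvious lever, but shorter segments mean more requests and more overhead per second of video, which is part of why the lower-latency formats below take a different approach.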

Luckily, video streaming has advanced to the point where it can deliver real-time latency to millions of viewers. Read on to find out how we got here.

HLS

Alex Barstow, a Software Engineer at Brightcove, covered Apple’s HTTP Live Streaming (HLS). HLS is currently the most widely used streaming standard, and it was the highest-latency protocol covered at the meetup.

As an adaptive bitrate streaming protocol, HLS breaks the stream into small MPEG Transport Stream (.ts) segments. It then uses extended M3U playlists (.m3u8): a master playlist lists the different quality renditions the client can request, and each rendition’s media playlist lists its segments.

Demonstrating the structure of the Master Playlist and all the segments contained therein.
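To make the master playlist concrete, here is a minimal Python sketch that writes one. `#EXTM3U` and `#EXT-X-STREAM-INF` with its `BANDWIDTH` and `RESOLUTION` attributes are standard HLS tags; the `Variant` class, the bitrates, and the rendition paths are hypothetical, and a production playlist would usually carry more attributes (such as `CODECS`).

```python
from dataclasses import dataclass


@dataclass
class Variant:
    """One quality rendition advertised in the master playlist (hypothetical)."""
    bandwidth_bps: int  # peak bitrate; BANDWIDTH is required on EXT-X-STREAM-INF
    width: int
    height: int
    uri: str            # path to this rendition's media playlist


def build_master_playlist(variants: list[Variant]) -> str:
    """Return master playlist text listing each variant, lowest bitrate first."""
    lines = ["#EXTM3U"]
    for v in sorted(variants, key=lambda v: v.bandwidth_bps):
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={v.bandwidth_bps},"
            f"RESOLUTION={v.width}x{v.height}"
        )
        lines.append(v.uri)  # the URI line must follow its EXT-X-STREAM-INF tag
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_master_playlist([
        Variant(5_000_000, 1920, 1080, "1080p/index.m3u8"),
        Variant(800_000, 640, 360, "360p/index.m3u8"),
    ]), end="")
```

The player fetches this file once, then picks a variant and polls that variant’s media playlist for new segments, switching variants as its measured bandwidth changes.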

HLS enjoys native support in Safari (on both macOS and iOS), Chrome on Android, Microsoft Edge, and streaming devices (Roku, Android TV, etc.). Through JavaScript players built on the Media Source Extensions API, HLS playback extends to desktop Chrome and Firefox. This wide range of supported platforms, along with Apple’s large-scale deployment, made HLS the de facto standard.

If you’ve followed our blog at all, you are probably thinking to yourself, “Why isn’t WebRTC listed in that graph?”

Well, as it turns out, when Bitmovin sent out the survey for their 2019 report, WebRTC wasn’t one of the available options. Until recently, WebRTC was not seen as a viable one-to-many streaming option. Obviously, we at Red5 Pro have successfully disproved that theory. It will be interesting to see whether the 2020 report makes any updates.

CMAF + MPEG-DASH

Thiago Teixeira, also from Brightcove, then discussed the more complex (and more flexible) MPEG-DASH (Dynamic Adaptive Streaming over HTTP). MPEG-DASH is an international standard (ISO/IEC 23009-1) developed by MPEG, the working group that standardizes audio and video coding. It is codec-agnostic and widely used on the web.

The idea behind MPEG-DASH was to describe all the different quality renditions in a single manifest file, the Media Presentation Description (MPD), rather than in separate media playlists as with HLS. Despite this consolidation, the rest of the design is quite similar to HLS, leaving MPEG-DASH with similar latency and many of the same limitations.

Breakdown of different quality streams contained in a DASH file.
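For comparison, the sketch below builds a skeletal DASH manifest with Python’s standard `xml.etree.ElementTree`: every rendition becomes a `Representation` inside a single `AdaptationSet`, so one file describes all qualities. It is an illustration, not a schema-complete MPD; segment addressing (for example `SegmentTemplate`) and the timing attributes a real player needs are left out, and the rendition IDs and bitrates are made up.

```python
import xml.etree.ElementTree as ET

MPD_NS = "urn:mpeg:dash:schema:mpd:2011"


def build_mpd(renditions: list[tuple[str, int, int, int]]) -> str:
    """Return a skeletal MPD with one Representation per rendition.

    Each tuple is (representation_id, bandwidth_bps, width, height). All
    renditions share one AdaptationSet, so a single manifest lists them all.
    """
    mpd = ET.Element("MPD", {
        "xmlns": MPD_NS,
        "profiles": "urn:mpeg:dash:profile:isoff-live:2011",
        "minBufferTime": "PT2S",
    })
    period = ET.SubElement(mpd, "Period", {"id": "0"})
    adaptation_set = ET.SubElement(period, "AdaptationSet", {"mimeType": "video/mp4"})
    for rep_id, bandwidth, width, height in renditions:
        ET.SubElement(adaptation_set, "Representation", {
            "id": rep_id,
            "bandwidth": str(bandwidth),
            "width": str(width),
            "height": str(height),
        })
    return ET.tostring(mpd, encoding="unicode")


if __name__ == "__main__":
    print(build_mpd([
        ("360p", 800_000, 640, 360),
        ("720p", 2_500_000, 1280, 720),
        ("1080p", 5_000_000, 1920, 1080),
    ]))
```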

Supporting both HLS and DASH meant that multiple versions of the same content had to be stored on content delivery networks (CDNs), which are responsible for stream distribution, in order to support playback across devices.

With that in mind, Apple and Microsoft proposed the Common Media Application Format (CMAF) to the Moving Picture Experts Group (MPEG) as a common format based on fragmented MP4 containers. This cuts down on storage and distribution costs, since the same content no longer needs to be packaged and stored multiple times to support different devices. Using chunked encoding and chunked transfer encoding, CMAF can achieve latency under three seconds, a major improvement over standard HLS and MPEG-DASH.

Ahhh… much cleaner.
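CMAF’s chunked transfer is easy to see at the HTTP level. Instead of waiting for a whole segment to finish, the server can send each CMAF chunk (a small `moof`/`mdat` pair) as soon as the encoder produces it, framed with HTTP/1.1 chunked transfer encoding: the chunk size in hexadecimal, CRLF, the bytes, CRLF, and finally a zero-size chunk to end the body. The generator below is a minimal sketch of that framing only; the function name is hypothetical, and the input bytes stand in for real CMAF chunks.

```python
from typing import Iterable, Iterator


def chunked_frames(cmaf_chunks: Iterable[bytes]) -> Iterator[bytes]:
    """Yield HTTP/1.1 chunked-transfer frames, one per CMAF chunk.

    Each frame can be written to the socket as soon as its chunk exists, so
    the player can start decoding before the full segment is complete.
    """
    for chunk in cmaf_chunks:
        if not chunk:
            continue  # a zero-size chunk would end the body prematurely
        yield f"{len(chunk):X}\r\n".encode("ascii") + chunk + b"\r\n"
    yield b"0\r\n\r\n"  # last-chunk followed by an empty trailer section


if __name__ == "__main__":
    # Placeholder bytes standing in for real moof/mdat chunks.
    body = b"".join(chunked_frames([b"moof+mdat #1", b"moof+mdat #2"]))
    print(body)
```

HTTP/2 and HTTP/3 frame response bodies natively and do not use this encoding, but the principle of forwarding partial segments as they are produced is the same.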

However, CMAF still isn’t fast enough for real-time live streaming, which brings us to our last topic.

Red5 Pro and WebRTC

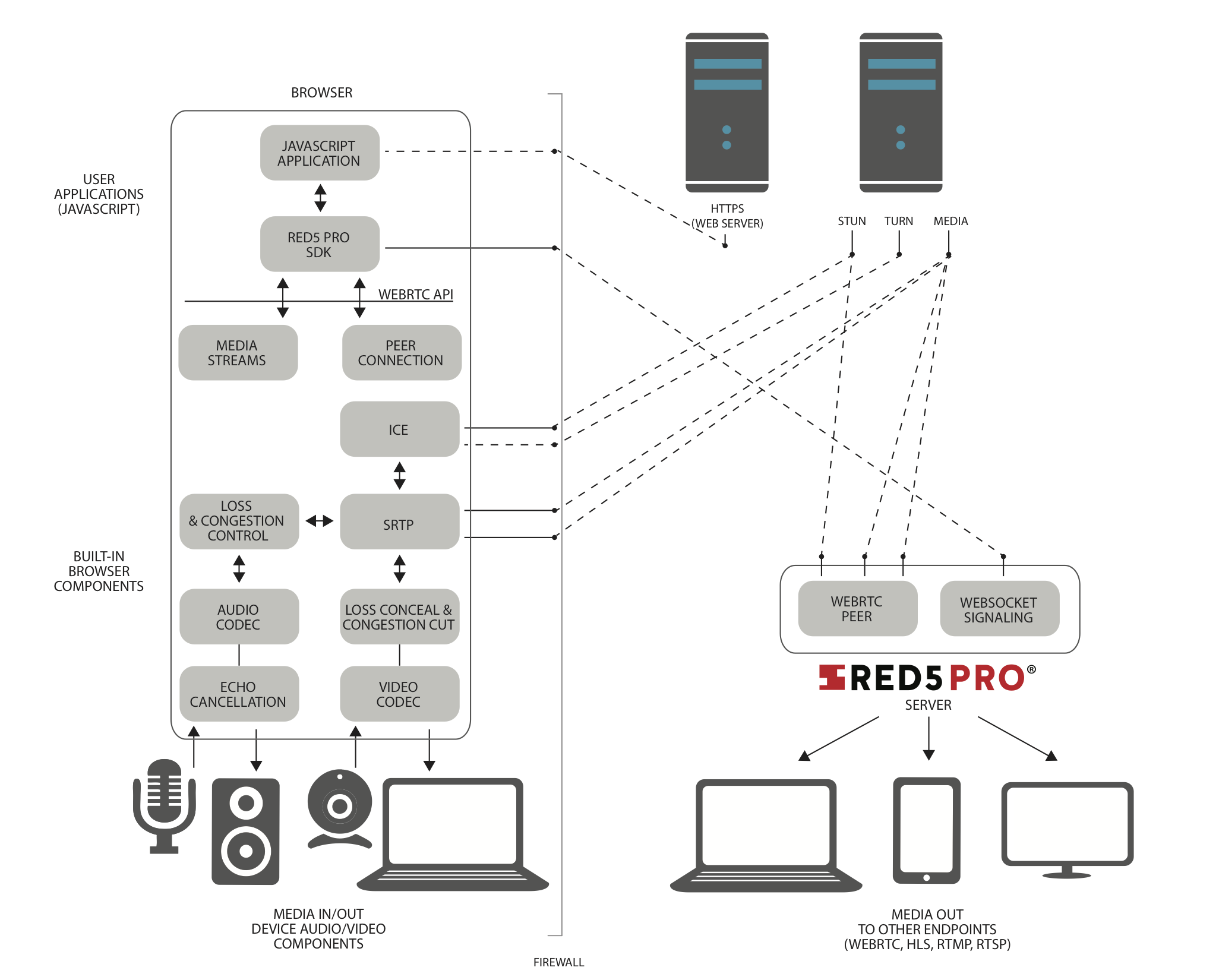

Red5 Pro CEO and technical co-founder Chris Allen covered how Red5 Pro’s implementation of WebRTC provides latency under 500 milliseconds while remaining fully scalable. Because it is supported by all the major web browsers, WebRTC runs on a wide variety of devices. As Chris explained, the downside of the one-to-many WebRTC approach was the complexity involved in building the solution. As anybody who’s played around with WebRTC knows, there are a great number of moving parts involved.

The WebRTC distribution flow, from built-in browser components to user applications, then over to the web server for final consumption by the media-out endpoints.

As shown above, setting up a WebRTC peer-to-peer connection is a complex process: the peers exchange session descriptions over a signaling channel, then use ICE negotiation, with help from STUN and TURN servers, to find a network path between them. A detailed breakdown of the signaling and negotiation process can be found in this blog post.
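One piece of ICE negotiation that is easy to show in code is candidate prioritization. Each peer gathers candidates: host addresses on its own interfaces, server-reflexive addresses learned from a STUN server, and relayed addresses allocated on a TURN server. RFC 8445 recommends computing each candidate’s priority as 2^24 × type preference + 2^8 × local preference + (256 - component ID), with recommended type preferences of 126 for host, 110 for peer-reflexive, 100 for server-reflexive, and 0 for relayed candidates, so direct paths are preferred over TURN relays. The Python sketch below implements that formula; its defaults assume a single network interface (local preference 65535) and the RTP component (ID 1).

```python
# Recommended type preferences from RFC 8445 (higher = preferred).
TYPE_PREFERENCE = {
    "host": 126,   # address on the peer's own network interface
    "prflx": 110,  # peer-reflexive, discovered during connectivity checks
    "srflx": 100,  # server-reflexive, learned from a STUN server
    "relay": 0,    # relayed through a TURN server
}


def candidate_priority(cand_type: str, local_pref: int = 65535,
                       component_id: int = 1) -> int:
    """ICE candidate priority per the RFC 8445 recommended formula."""
    if not 0 <= local_pref <= 65535:
        raise ValueError("local preference must fit in 16 bits")
    if not 1 <= component_id <= 256:
        raise ValueError("component ID must be between 1 and 256")
    return (TYPE_PREFERENCE[cand_type] << 24) + (local_pref << 8) + (256 - component_id)


if __name__ == "__main__":
    # Highest priority first: host, then prflx, srflx, and relay last.
    for t in sorted(TYPE_PREFERENCE, key=candidate_priority, reverse=True):
        print(t, candidate_priority(t))
```

The familiar value 2130706431 that appears on host candidates in many WebRTC SDP dumps is exactly this formula with the defaults above.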

The heavy computing resources involved in setting up peer-to-peer connections have led some to the false belief that WebRTC cannot scale. By leveraging cloud infrastructure, Red5 Pro’s Auto Scaling Solution can spin new server instances up (or down) in real time to handle increases and decreases in the number of publishers or subscribers.
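The post doesn’t spell out how the Auto Scaling Solution decides when to add or remove servers, so the sketch below is a hypothetical illustration of the general idea, not Red5 Pro’s algorithm: derive the number of edge servers from current demand and a per-server capacity, then launch or terminate the difference. The function names, the capacity figure, and the minimum pool size are all assumptions made for the example.

```python
def edges_needed(subscribers: int, per_edge_capacity: int, min_edges: int = 1) -> int:
    """Smallest edge pool that can serve `subscribers` (hypothetical policy)."""
    if per_edge_capacity <= 0:
        raise ValueError("per_edge_capacity must be positive")
    if subscribers < 0:
        raise ValueError("subscribers cannot be negative")
    # Integer ceiling division: 1001 subscribers at 500 per edge -> 3 edges.
    return max(min_edges, -(-subscribers // per_edge_capacity))


def scaling_delta(current_edges: int, subscribers: int, per_edge_capacity: int,
                  min_edges: int = 1) -> int:
    """Positive: instances to launch. Negative: instances to terminate."""
    return edges_needed(subscribers, per_edge_capacity, min_edges) - current_edges


if __name__ == "__main__":
    print(scaling_delta(current_edges=2, subscribers=1001, per_edge_capacity=500))
    print(scaling_delta(current_edges=5, subscribers=1001, per_edge_capacity=500))
```

A real controller would typically add headroom and some hysteresis so that a brief spike in viewers doesn’t cause instances to flap up and down.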

The Stream Manager serves as the main point of control, spinning up new origin servers for broadcasters and edge servers for subscribers. An overview of this process can be found in this blog post, and a detailed explanation on Red5 Pro’s documentation page.

Moving beyond that, Chris outlined our latest innovation: the Cross-Cloud Delivery System (CCDS). Using Terraform, the CCDS allows users to draw on a wide variety of global data centers. This makes it possible to deliver live streams wherever they are needed, reducing hosting costs and simplifying live video delivery.

Distribution of global data centers available for stream distribution from major internet service providers.

The Red5 Pro Stream Manager uses the Terraform cloud controller to connect to a variety of cloud providers.

Keep an eye out here for more meetups and information about our exciting CCDS. If you don’t want to wait, request the whitepaper that outlines the entire process.

Of course, if you have any questions or just want to find out more about Red5 Pro, please send an email to info@red5.net or schedule a call. You can also check out a demo of our sub-500-millisecond live streaming latency right here.

Overall, it was an insightful event and we’d like to thank Bitmovin for making it a success.