Streaming at the Speed of Thought: How Human Perception Affects the User Experience

When we talk about real-time streaming at Red5, we often use the phrase “Streaming at the Speed of Thought.” It is not just a tagline. It is a very literal target and mission. In this blog you will learn how human perception, neuroscience, and physics shape what “real-time” actually means in practical streaming systems.

Watch my short talk on this topic on YouTube.


The Science Behind the “Speed of Thought”

Neuroscience tells us there is a gap between when something hits our senses and when we consciously register it. Depending on the task, the “Speed of Thought” typically falls somewhere between about 50 and 200 ms, with values around 150 ms often cited for simple reactions like a runner responding to a starting gun. Once you get under roughly 400 ms end to end, most people cannot consciously detect a delay, as long as everyone in the experience is seeing the same thing at roughly the same time.

More recent work out of Caltech puts another interesting number on this. If you look at the actual information throughput of our conscious processing, it is on the order of 10 bits per second, versus roughly a billion bits per second coming in through our senses. The authors jokingly call the belief that we can “upgrade” this like a modem the “Musk illusion.” Our brains are nowhere near as instant as our networks. If you prefer video, watch this video.

So when we say we want streaming video “at the Speed of Thought,” what we really mean is this: we want the full pipeline, from the camera to the viewer, to stay under the thresholds where human perception starts to complain, and we want all participants to stay in sync with each other.
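One way to make this concrete is to treat latency as a budget. The minimal Python sketch below sums the per-stage latency from camera to viewer and reports how much headroom remains under the roughly 400 ms ceiling discussed above. The stage names and numbers are made-up placeholders, not measurements from any Red5 deployment.

```python
# Rough "Speed of Thought" ceiling from the discussion above.
PERCEPTION_THRESHOLD_MS = 400.0


def end_to_end_latency(stages: dict[str, float]) -> float:
    """Glass-to-glass latency is simply the sum of every stage in the chain."""
    return sum(stages.values())


def headroom_ms(stages: dict[str, float],
                threshold_ms: float = PERCEPTION_THRESHOLD_MS) -> float:
    """Positive: milliseconds left before viewers could notice. Negative: over budget."""
    return threshold_ms - end_to_end_latency(stages)


# Hypothetical per-stage numbers in milliseconds; replace with your own measurements.
pipeline = {
    "capture": 33.0,
    "encode": 50.0,
    "network": 80.0,
    "decode_and_render": 60.0,
}

print(f"end to end: {end_to_end_latency(pipeline):.0f} ms, "
      f"headroom: {headroom_ms(pipeline):.0f} ms")
```

The exact numbers matter less than the habit. Every stage spends part of a fixed budget, and human perception sets that budget, not whatever the network could theoretically achieve.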

Humans Are Part Of Your Latency Budget

Most product teams obsess over encoder settings, buffer sizes, and protocol overhead. That is important. But we often forget that people themselves add latency.

A few examples from the research and from real deployments:

- Reaction time. Average visual reaction time is around 250 ms, and even highly trained people rarely get below 150–190 ms.

- Eye movements and focus. Shifting your gaze with a saccade and refocusing on a new target typically takes on the order of 200 ms when you include the delay before the eye movement even starts. Larger gaze shifts with head movement take longer.

- Switching views. Moving your attention from a phone screen in your hand to a huge LED board in a stadium, or from the field to a TV over the concourse, is not free. By the time you notice something happened, decide to look, move your head, and refocus, hundreds of milliseconds have already gone by.

This is why I push back when someone insists they “need” 50 ms latency for a use case that is fundamentally visual and spread across a large physical space. If fans are in a stadium, watching the field, the jumbotron, and overhead TVs, they are not lab subjects staring at a single pixel.

In-Stadium Streaming, Physics, And Fan Experience

Watching a basketball game in a stadium.

We have been doing a lot of work around in-stadium streaming, and this is where the difference between physics and perception gets very real.

One recent conversation was with a team that wanted every TV in a football stadium to track the live action on the pitch with less than 100 ms of delay from the field. On paper that sounds great. In practice, with their encoder and network constraints, it was extremely hard to hit.

A goal missed because of human perception at the stadium.

The funny part is that they were already hitting something closer to 400 ms quite reliably, which is well within the “Speed of Thought” window for most people. By the time a goal is scored, the crowd roars, and you look up from your drink to the nearest screen, you have already burned more than that in human reaction and eye movement. You simply cannot perceive that extra couple of hundred milliseconds in that context.
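The stadium argument can be written down as a simple timeline. In this hypothetical Python sketch, the goal happens at t = 0 and the screen shows it after the stream latency. The fan's eyes land on the screen after a reaction plus a gaze shift. The 250 ms and 200 ms defaults are the rough figures quoted earlier in this post, used here as assumptions. The model also ignores the time it takes the crowd to roar and for that sound to reach you, which would only add more delay on the human side.

```python
# Rough human-latency figures quoted earlier in this post (assumptions, not measurements).
REACTION_MS = 250.0    # average visual reaction time
GAZE_SHIFT_MS = 200.0  # saccade plus refocus, excluding head movement


def screen_lead_ms(stream_latency_ms: float,
                   reaction_ms: float = REACTION_MS,
                   gaze_shift_ms: float = GAZE_SHIFT_MS) -> float:
    """How long the moment has already been on screen when the fan's eyes arrive.

    The event happens at t = 0 and appears on screen at t = stream_latency_ms.
    The fan reacts and refocuses on the screen at t = reaction_ms + gaze_shift_ms.
    A non-negative result means the video was already waiting for the viewer.
    """
    eyes_on_screen_ms = reaction_ms + gaze_shift_ms
    return eyes_on_screen_ms - stream_latency_ms


for latency in (100.0, 400.0, 600.0):
    lead = screen_lead_ms(latency)
    verdict = "hidden by human delay" if lead >= 0 else "possibly visible"
    print(f"{latency:.0f} ms stream: {lead:+.0f} ms -> {verdict}")
```

Under these assumptions, both the 100 ms target and the 400 ms the team was already hitting finish before the fan is even looking at the screen.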

In my recent blog on real-time in-stadium streaming, I talked about this in more detail: how real-time video inside the venue makes overhead screens, mobile apps, and broadcast operations all feel like a single coherent system instead of three slightly different timelines. For more detail, check out our in-stadium streaming solution page. It outlines what Red5 offers for venues and operations teams working on this type of experience.

The Speed Of Sound, Thunder, And Why Lip Sync Is Hard

Vision is surprisingly forgiving. Audio is not.

The speed of sound in dry air at 68 °F is about 343 m/s, and it changes with temperature and humidity. Stadium sound engineers know this cold. They deliberately introduce delays on different speaker arrays so that, for a given seating zone, the sound from the nearest speaker hits your ears at roughly the same time as the sound originating from the stage, or at least close enough that it feels natural. This is basic time alignment.
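The delay-tower arithmetic is simple enough to sketch. The Python below uses the common linear approximation for the speed of sound in dry air: about 331.3 m/s at 0 °C, rising by roughly 0.606 m/s per °C. That gives about 343 m/s at 68 °F. The seat distances are hypothetical, and real systems are typically tuned with measurement tools rather than a single formula.

```python
def fahrenheit_to_celsius(temp_f: float) -> float:
    return (temp_f - 32.0) * 5.0 / 9.0


def speed_of_sound_m_s(temp_c: float) -> float:
    """Linear approximation for dry air: ~331.3 m/s at 0 °C plus ~0.606 m/s per °C."""
    return 331.3 + 0.606 * temp_c


def tower_delay_ms(stage_to_seat_m: float, tower_to_seat_m: float,
                   temp_c: float = 20.0) -> float:
    """Electronic delay for a speaker tower so its sound lands with the sound from the stage.

    Stage sound travels stage_to_seat_m; tower sound only travels tower_to_seat_m.
    Delaying the tower feed by the difference in travel time lines the two up.
    """
    c = speed_of_sound_m_s(temp_c)
    return (stage_to_seat_m - tower_to_seat_m) / c * 1000.0


# Hypothetical seat: 120 m from the stage, 20 m from the nearest delay tower, at 68 °F.
temp_c = fahrenheit_to_celsius(68.0)
print(f"speed of sound: {speed_of_sound_m_s(temp_c):.1f} m/s")
print(f"tower delay: {tower_delay_ms(120.0, 20.0, temp_c):.0f} ms")
```

For this example seat, the tower needs about 291 ms of delay. Many designers add a few extra milliseconds so that the stage sound still arrives first and the sound stays anchored to the stage (the precedence, or Haas, effect). That refinement is left out here.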

Lightning is visible before thunder is heard.

You have seen the classic version of this on a stormy night. Lightning and thunder happen at essentially the same moment, but you always see the lightning first and hear the thunder later because light travels almost a million times faster than sound.

In a stadium or arena, the same principle shows up in the most painful UX problem of all. Lip sync.

There is a surprisingly small window where the brain is happy with audio and video lining up. For broadcast and film, acceptable lip sync error is often kept within a few tens of milliseconds. Controlled tests suggest that people can detect audio leading or lagging the video once the offset passes something like 40–100 ms, depending on the direction. Audio that arrives ahead of the picture is noticed sooner than audio that trails it.

Now put this in a real venue.

- A singer is on stage, projected on huge LED screens.

- A drummer is hitting a snare that you see both directly and on the screen.

- The physical sound from the stage takes time to travel to you.

- The sound from the PA system is delayed and shaped differently depending on your section.

- The video chain has its own processing latency, but once the image is on screen, its light reaches you at the speed of light. The picture therefore arrives “instantly” compared to the audio.

If the screens and the sound system are not designed together, you get that unpleasant feeling that the mouth and the voice are slightly off, or the drum hit is visually out of step with what you hear.

Those are the hardest cases. Not the scoreboard graphics, not the instant replay, but making a live performer on stage feel coherent with a distributed audio and video system that is bound by the speed of sound.
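A rough per-seat check shows why screens and sound have to be designed together. This hypothetical Python sketch compares when the PA audio reaches a seat with when the LED image does, and flags offsets outside a lip sync window. Several inputs are assumptions:

- The 40 ms lead and 100 ms lag limits are taken loosely from the range mentioned above.
- The 100 ms video chain latency is a made-up example.
- The model ignores the direct sound from the stage.

```python
SPEED_OF_SOUND_M_S = 343.0  # dry air at about 20 °C (68 °F)

# Detection limits taken loosely from the 40-100 ms range above (assumptions).
AUDIO_LEAD_LIMIT_MS = 40.0   # audio ahead of the picture is noticed sooner
AUDIO_LAG_LIMIT_MS = 100.0   # audio behind the picture is tolerated a bit longer


def av_offset_ms(pa_to_seat_m: float, pa_delay_ms: float, video_chain_ms: float) -> float:
    """Positive result: audio arrives after the image on the LED screen (audio lags)."""
    audio_arrival_ms = pa_delay_ms + pa_to_seat_m / SPEED_OF_SOUND_M_S * 1000.0
    video_arrival_ms = video_chain_ms  # light from screen to seat is effectively instant
    return audio_arrival_ms - video_arrival_ms


def lip_sync_ok(offset_ms: float) -> bool:
    """True if the offset sits inside the assumed detection window."""
    return -AUDIO_LEAD_LIMIT_MS <= offset_ms <= AUDIO_LAG_LIMIT_MS


# Hypothetical seats at different distances from the PA, no PA delay, 100 ms video chain.
for distance_m in (5.0, 30.0, 80.0):
    offset = av_offset_ms(distance_m, pa_delay_ms=0.0, video_chain_ms=100.0)
    print(f"{distance_m:>4.0f} m: {offset:+6.1f} ms, in sync: {lip_sync_ok(offset)}")
```

With these example numbers, the nearest seat hears the PA well ahead of the screen. The 30 m seat falls inside the window, and the 80 m seat hears the audio too late. No single delay setting fixes every seat at once, which is why the PA is delayed differently for each section.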

How Red5 and Partners Bring “Speed Of Thought” Into The Physical World

Integration with Amino Media Players

A lot of this becomes very practical when you think about the infrastructure needed to deliver these experiences.

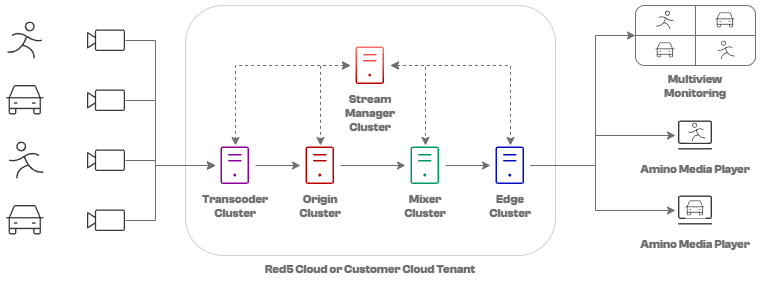

With Amino, we have been working on exactly this intersection of physics, human perception, and operations. Together we integrated Red5 real-time streaming with Amino media players and their Orchestrate device management platform. That combination gives you sub-250 ms latency streams delivered to hundreds or thousands of endpoints, with on-premises deployment and full remote monitoring.

Red5 and Amino deployment diagram.

For stadiums and arenas, that means you can stream live sports to TVs and LED boards across the venue, keep endpoints synchronized within the “Speed of Thought” window, and still respect operational constraints like limited crew and tight security requirements.

The same pattern shows up in other real-world environments that care about timing and trust:

- Remote monitoring and surveillance. Security operations centers watch walls of screens that represent real people and assets. You want real-time situational awareness, but you also want on-premises control, low jitter, and reliable device management across large estates.

- Secure transactions. Think digital signatures or identity verification, where a clerk and a customer need to see the same thing in sync and confirm it in real time.

- Retail betting and general retail. In betting shops, last-second bets only feel fair if the displays across the shop are aligned and closely track the live event. In retail, video-assisted consultations only feel authentic if the conversation feels like a conversation, not a sequence of delayed clips.

In all of these, you do not need zero milliseconds of latency. You need predictable latency that sits below the thresholds of human perception, and you need the ability to keep many endpoints in sync within that window.
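A common pattern for keeping many screens in sync is to align every endpoint to the slowest one. Each player adds just enough extra buffering that all of them present the same frame at the same moment. The Python below sketches that pattern and is not necessarily how Red5 or Amino implement it. It assumes the endpoints share a clock (for example via NTP or PTP) and that their latencies have already been measured. The endpoint names and numbers are hypothetical.

```python
SPEED_OF_THOUGHT_MS = 400.0


def playout_delays_ms(measured_ms: dict[str, float]) -> dict[str, float]:
    """Extra buffering per endpoint so every screen shows a frame at the same instant.

    Every endpoint waits until the slowest one could also display the frame,
    so the shared presentation latency equals the largest measured latency.
    """
    target = max(measured_ms.values())
    return {name: target - latency for name, latency in measured_ms.items()}


def fits_speed_of_thought(measured_ms: dict[str, float],
                          threshold_ms: float = SPEED_OF_THOUGHT_MS) -> bool:
    """True if the aligned (slowest) latency still sits under the perception threshold."""
    return max(measured_ms.values()) <= threshold_ms


# Hypothetical measured glass-to-glass latencies for three screens, in milliseconds.
measured = {"concourse_tv_12": 180.0, "led_board_north": 240.0, "suite_tv_3": 205.0}

for name, extra in playout_delays_ms(measured).items():
    print(f"{name}: add {extra:.0f} ms of buffer")
print("inside the window:", fits_speed_of_thought(measured))
```

The trade-off is that the whole group runs at the latency of its slowest member. That is why predictable latency on every endpoint matters as much as a low average.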

Integration with Osprey Video Talon Encoders

We achieved similar results in our work with Osprey Video’s Talon encoders, which are widely used in professional production, live events, houses of worship, and broadcast environments. The goal was to pair Osprey’s reliable, field-proven encoding pipeline with Red5 real-time streaming to create an end-to-end workflow that stays comfortably within the “Speed of Thought” performance envelope.

Diagram illustrating how 4K streaming works with Osprey encoders and Red5 Pro or Red5 Cloud.

The integration enables Talon encoders to deliver 4K streams into Red5 Cloud or Red5 Pro clusters with sub-100 ms end-to-end latency at 60 FPS, providing high-quality real-time video that stays stable even under challenging network conditions. Some of the use cases that benefit from the Red5 and Osprey Video solution include:

- Live Sports Streaming: High latency breaks the experience. Fans expect real time at the venue or at home, and as watch parties and other interactive features grow, sub-second video becomes essential.

- Live Sports Betting: Bets must be placed and processed on current information. Even small delays cause missed opportunities or unfair advantages.

- GovTech: Sub-second streaming for traffic and runway monitoring, smart cities, secure air-gapped video streaming and real-time drone feeds for public safety and defense.

- Surveillance and Security: Sub-second streaming enables real-time monitoring and faster response to breaches or suspicious activity.

- News Broadcasting: Breaking updates need real-time delivery so anchors, reporters, and viewers stay in sync and avoid talk-over.

- Online Auctions and Bidding: Sub-second latency keeps bids synchronized for all, increasing participation and final sale values.

- Online Casinos and Gambling: Live dealer and in-play wagers depend on real-time video and data to maintain integrity and engagement.

- House of Worship Streaming: Broadcast religious services to remote congregations with secure, real-time video.

- Remote Production Workflows: Manage multiple contribution feeds on-premises and push them to the cloud for collaborative production.
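To put the sub-100 ms at 60 FPS figure from the Osprey integration in perspective, it helps to count frames. A frame at 60 FPS lasts about 16.7 ms. A 100 ms glass-to-glass budget therefore covers only about six frame intervals for capture, encoding, transport, decoding, and display combined. The Python helper below is just that arithmetic. It does not describe how Talon encoders or Red5 actually divide the budget.

```python
import math


def frame_interval_ms(fps: float) -> float:
    """Duration of one frame at the given frame rate."""
    return 1000.0 / fps


def frames_in_budget(budget_ms: float, fps: float) -> int:
    """Whole frame intervals that fit inside a latency budget.

    Multiplying before dividing keeps exact cases such as 100 ms at 60 FPS
    from rounding down due to floating-point error.
    """
    return math.floor(budget_ms * fps / 1000.0)


for budget in (100.0, 250.0, 400.0):
    print(f"{budget:.0f} ms at 60 FPS = {frames_in_budget(budget, 60.0)} frames "
          f"of {frame_interval_ms(60.0):.1f} ms")
```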

Designing For Real Humans, Not Just Lower Numbers

The big lesson for me, and what we talk about internally a lot at Red5, is that nothing is truly instant, including your users. There is latency in the camera, in the encoder, in the network, and in the player. There is also latency in the eyes, ears, and brain on the other side.

When we design real-time systems, especially for environments like in-stadium streaming, drone operations, smart city surveillance, live auctions, or sports betting, the question should not only be “how low can we get our latency number.” The better questions are:

- Which parts of this experience need to be aligned at the speed of sound and perception, and

- Where is “speed of thought” more than enough as long as everything stays in sync?

Those answers will differ for an in-arena concert, a stadium full of screens, a remote betting shop, or a traffic operations center. The physics stays the same, but the perception does not.

Conclusion

Streaming at the Speed of Thought is ultimately about designing real-time systems that work within the limits of human perception, not chasing abstract latency numbers. When video, audio, and every endpoint stay aligned inside that sub-400 ms window, the experience feels instant, natural, and trustworthy. The goal is predictable, in-sync delivery that matches how people actually see, hear, and react in the real world.

Try Red5 For Free

🔥 Looking for a fully managed, globally distributed streaming PaaS solution? Start using Red5 Cloud today! No credit card required. Get 50 GB of free streaming each month.

Looking for server software designed for ultra-low latency streaming at scale? Start your Red5 Pro 30-day trial today!

Not sure which solution would best solve your streaming challenges? Watch a short YouTube video explaining the difference between the two solutions, or reach out to our team to discuss your case.

Chris Allen is the co-founder and CEO of Red5, with over 20 years of experience in video streaming software and real-time systems. A pioneer in the space, he co-led the team that reverse-engineered the RTMP protocol, launching the first open-source alternative to Adobe’s Flash Communication Server. Chris holds over a dozen patents and continues to innovate at the intersection of live video, interactivity, and edge computing. At Red5, he leads the development of TrueTime Solutions, enabling low-latency, synchronized video experiences for clients including NVIDIA, Verizon, and global tech platforms. His current work focuses on integrating AI and real-time streaming to power the next generation of intelligent video applications.